1 Introduction

Understanding the mind is ultimately about understanding a dynamic system comprised of a large set of elements that interact in complex ways. If we had designed the mind, things would be easier: Like a car, a computer, or some other piece of modern machinery, we would have built different parts for different functions and stuck them together in a way that would make it easy for us to repair and reconfigure the system. In reality, things are not so simple. Because the mind is not human-engineered (or really “engineered” at all!), for us mere humans to understand it requires us to reverse-engineer the mind. In other words, we have to see what the mind does in some particular situation, build a particular type of machine—a model—that we hypothesize operates like the mind does, and then put the machine in that same scenario and see whether it acts like a human mind. To the extent that our model acts like a human in that scenario, that gives us reason to believe that the model is operating in some sense “like” a human mind does in that scenario.

In the early days of scientific psychology, our tools for building models of complex dynamical systems were quite limited. We could build physical models, but these are constrained by the physical properties of the materials we use (nonetheless, it is worth noting that mechanical model of attention by Broadbent (1957) is designed in such a way that the physical properties of the model capture important psychological constraints). Purely mathematical models may not have been so strongly constrained by physicality, but when considering situations with many interacting elements or when stochastic noise is involved, mathematical models quickly become intractable (consider the insoluble “three body problem”—even with a complete model of such a system, we cannot derive its future behavior analytically). As a result, early psychology was dominated by behaviorism, which eschewed the development of theories of the mind and contented itself merely with observing and cataloging behavior.

It was not until the middle of the twentieth century, when modern computers began to become of use, that the possibility of “reverse-engineering the mind” became a reality. This was the time of the “cognitive revolution” (Neisser, 1967). The revolution came about for both technical and conceptual reasons.

From a conceptual perspective, computers offered a productive metaphor for helping us understand how the mind works. A computer uses the same physical substrate to perform different functions, similar to how the same brain lets us both speak and play piano. A computer’s adaptivity comes from the fact that the computer can run different “programs” on its hardware. A program is a set of procedures that take a set of “inputs” and transform them into “outputs”. This is analogous to how a living organism decides to act in a certain way (its “outputs”) depending on its goals and on the environment it happens to be in (its “inputs”). Meanwhile, the procedures that transform a computer’s inputs into outputs often involve intermediate steps that do not themselves produce observable behavior but which are nonetheless represented by changes in the internal state of the computer. These internal states are analogous to thoughts or beliefs in that they may not be externally observable, but they are critical steps on the path toward taking an action. The computer metaphor thus enables us to understand cognition in terms of how internal states of mind represent aspects of an organism’s environment, goals, and thoughts in such a way that these representations can be processed to yield behavior that is appropriate to the situation the organism is in.

From a technical perspective, computers offer a way to derive predictions from complex models that would not have been tractable otherwise. As we shall see, this is particularly valuable for two applications: First, we can use the computer to simulate what a model would do and thereby understand the distribution of possible actions it can take. This obviates the need to derive predictions through mathematical analysis or logic, which though powerful, can only be applied to simple models. Second, we can use the computer to fit a model to data. Almost all models have parameters which can be thought of as “knobs” or “settings” that adjust the kind of behavior the model produces. To “fit” a model means to find the parameters for that model that get it to generate behavior that is as close as possible to the behavior recorded in a dataset. Except for very simple models, it is impossible (or at least very impractical) to try to fit them to data without a computer. But as we shall see, fitting a model is useful because we can infer from the best-fitting parameters something about the person who produced the data to which the model was fit.

In summary, computers made it feasible for cognitive psychologists to “reverse-engineer” the mind because they (a) provided a valuable conceptual metaphor that allowed theories of cognition to be posed in the form of computational models comprised of internal representations and processes applied to those representations; (b) enabled predictions to be derived for models that were complex and/or had stochastic elements; and (c) enabled those same kinds of models to be “fit” to data so that model parameters can give insight into how a person performed the task for which data was recorded.

There is an important difference between “reverse-engineering” a natural system, like the mind, from reverse-engineering a human-designed system like a car. Because a natural system was not “engineered”, the models we devise are not guaranteed to work the same way as a natural system, even if the model accurately mimicks the behavior of the natural system in the cases we study. The purpose in “reverse-engineering” the mind is not to build a duplicate mind, it is instead to “translate” a complex system into a form that enables us to understand it better. The model is a deliberate simplification which we expect to deviate from reality in many ways. What we hope is that we arrive at a model that helps us understand the key features of a natural system well enough for us to understand why it acts the way it does in specific situations (for further discussion of the purposes of models in psychology, see Cox & Shiffrin, 2024; Singmann et al., 2022).

1.1 Some functions of models

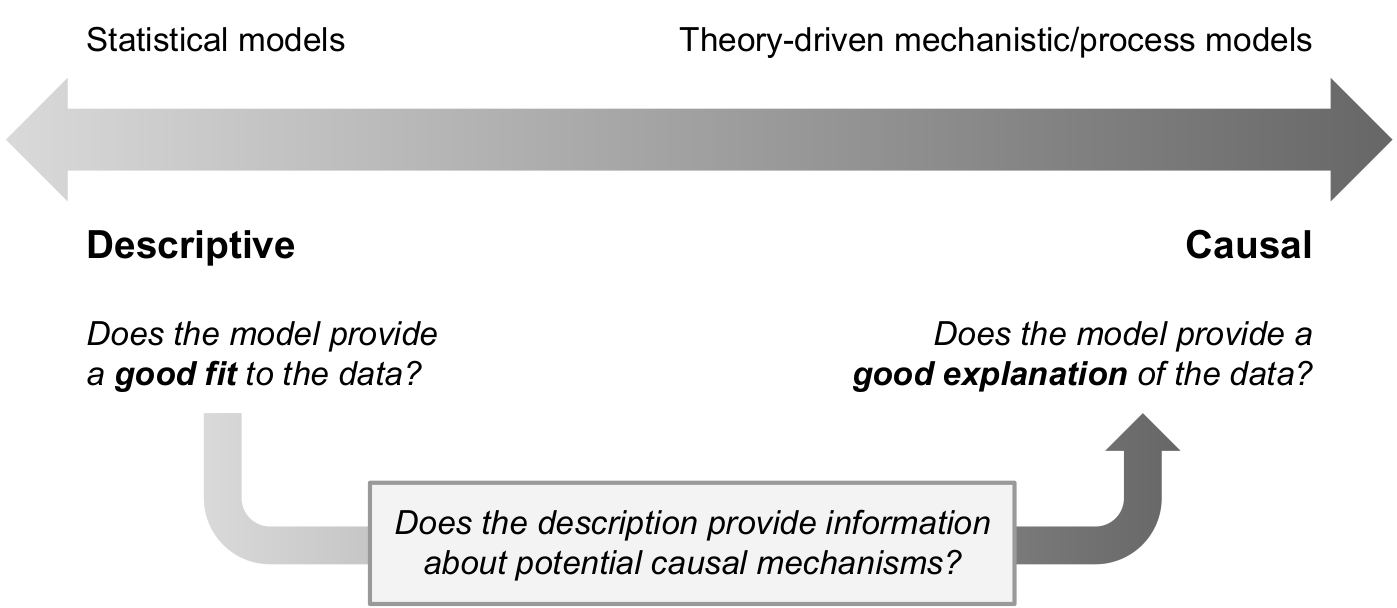

As Cox & Shiffrin (2024) describe, a computational cognitive model falls on the “causal” end of the spectrum in the graph shown at the top. They enumerate a couple of goals that such a model might serve:

- All models are wrong, but causal models are useful when they capture and approximate the causal processes at work and when they generalize to other settings. When modeling complex human behavior, all the models are at best crude approximations to reality, but nonetheless they can prove useful by describing the processes that are most important for explaining behavior.

- Modeling allows precise communication among scientists. When theories and hypotheses are proposed verbally and heuristically, their precise meaning is not generally known even to the proposers, and they are often misinterpreted and misunderstood by others. When theoretical constructs are clearly linked to elements of a model and the internal workings of the model are described with mathematical or computational precision (e.g., by including the code running the model simulation), other scientists can use, generalize, and test the model because its application in at least its original domain is well specified.

- Models make clear the links between assumptions and predictions. Different investigators cannot dispute the predictions of the model that are produced by a given set of parameters (the parameters generally index different quantitative variants of a given class of models).

- The formal specification of the model clarifies the nature of proposed internal cognitive processes. A poor modeler may fail to demonstrate that linkage. A good modeler will explore the parameter space and show how the parameter values change the predictions and how narrow or broad a range of outcomes can be predicted by changes in the parameter values. A good modeler will also explore alterations in the model, perhaps by deleting some processes or by adding others, or by fixing some parameter values at certain values, thus providing diagnostic information about the qualitative features of the outcome space that are primarily due to a process controlled by particular parameters. A bad modeler might claim a fit to data provides support for an underlying causal theory when in fact the fit is primarily due to some parameter or theory not conceptually related to the claim.

- Constructing a model can act as an “intuition pump” (cf. Dennett, 1980). Many modelers try to infer underlying cognitive processes from complex data sets that involve multiple measures (e.g., accuracy and response time) and many conditions which may be difficult or impossible to summarize adequately. Modelers form hypotheses about the processes involved based on the data and their prior knowledge. It is often the case that intuiting predictions for different combinations of processes is not possible due to the limitations of human cognition. Thus, different combinations of processes are instantiated as formal models, enabling a modeler to observe and test the predictions of their hypotheses. In an iterative model building process, the failures in each iteration indicate the way forward to an appropriate account.

- Modeling allows different hypothesis classes to be compared, both because the predictions of each are well specified and because model comparison techniques take into account model complexity. The issue of complexity is itself quite complex.

- One issue is due to statistical noise produced by limited data. A flexible model with many parameters can produce what seems to be a good fit with parameter values that explain the noise in the data rather than the underlying processes. The best model comparison techniques penalize models appropriately for extra complexity. However, models are in most cases developed after observing the data, and they are used to explain the patterns observed. To do so, they often incorporate assumptions that are critical but not explicitly parameterized. It thus becomes a difficult and subtle matter to assess complexity adequately. A secondary problem with using fit to compare models is the fact that the most principled ways to control for complexity, such as Bayesian model selection and minimum description length, are difficult to implement computationally and are often replaced by approximations such as the Akaike or Bayes/Schwartz information criteria that often fail to account for key aspects of model complexity.

- Simpler models are also favored for other reasons. A chief one is limited human reasoning: As a model becomes more complex, it becomes more difficult for a human to understand how it works. Simpler models are also favored when analytic predictions can be derived (thereby greatly reducing computational costs) and for qualitative reasons such as “elegance”.

- Simpler models are particularly favored when their core processes generalize well across many tasks.

- There exists a danger that the modeler will mistake small quantitative differences in “fit” for the important differences among models—differences that generally lie in qualitative patterns of predictions. Knowing one model provides a better fit to a limited set of observations from a narrow experimental setting is not often useful. For example, consider a model that correctly predicts the relative levels of recall accuracy observed across conditions in a given experiment but quantitatively overpredicts the accuracy in a single condition. Meanwhile, another model perfectly predicts the accuracy in that condition but fails to predict the right qualitative pattern of accuracy across conditions. We argue that the qualitatively correct prediction is a reason to favor the first model, even though it might yield a worse quantitative fit. Correct qualitative predictions across conditions are often a sign that a model captures an important and generalizable causal process.

1.2 The importance of simulation

The models that we will be covering are models that simulate behavioral (and maybe even neural) outcomes. Because we are building models of a complex system—namely, the mind—our models can also become complex. Therefore, understanding what kind of behavior a model produces may require us to simulate behavior from that model. This will also help us to understand the relationship between the model’s parameters and its behavior. By simulating how behavior changes as one or more parameters change, we can understand the role played by the theoretical construct represented by that parameter.

For example, we might have a parameter that represents the quality or precision with which an event is stored in memory. In a model where this memory representation is used to yield behavior, we can systematically adjust the value of the quality/precision parameter and observe the effect this has on the model’s simulated behavior. Again, because we are dealing with models that can grow quite complex, we may even be surprised by the behavior the model produces!

Two analogies may help give some intuition about the value of simulation: If we are cooking, often the only way to know how a particular combination of spices will taste is to actually combine them and taste. Model parameters are like the different amounts of each spice, with the final taste being analogous to the model’s simulated behavior. Alternatively, you can think of model parameters as being like characters in an improv sketch. The characters have different backgrounds and goals which dictate how they will interact and how the story will develop. The background and goals of the characters are like the parameters of a model, with the resulting performance analogous to the model’s predicted behavior.

1.3 The importance of fitting data

“Fitting” a model to data means finding the combination of parameter values for which the model produces behavior that most closely resembles that produced by a participant in some task. The value of doing this is that it helps us understand why that participant acted the way they did.

For example, we might want to know whether someone was fast because they were able to quickly accumulate the evidence they needed, because they were uncautious, because they were biased, or because they could execute motor actions quickly. We can address that question by finding the values of the parameters associated with each construct that best fit their observed performance.